Today is the day that we’ll be putting it all together! In the past few posts, we’ve been looking at different types of text fields and buttons; now we’ll discuss testing a form as a whole.

There are as many different ways to test forms as there are text field types! And unfortunately, testing forms is not particularly exciting. Because of this, it’s helpful to have a systematic way to test forms that will get you through it quickly, while making sure to test all the critical functionality.

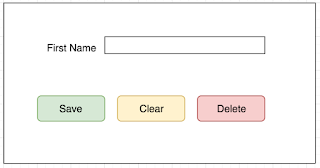

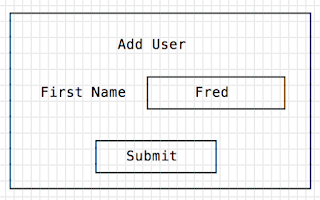

Let’s take a look at the form below, and I will walk you through a systematic approach to testing it. For simplicity, we’ll assume that the users will all be US-based. (See my previous posts for discussions on testing international postal codes and phone numbers.)

Step One: Required Fields

The first thing I do when I test a form is to make note of which fields are required. This particular form denotes required fields with a red asterisk. We can see that First Name, Last Name, Personal Phone, Street Address 1, City, State, and Zip Code are all required. I want to verify that the form cannot be submitted when a required field is missing and that I’m notified of which field is missing when I attempt to submit the form.

1. I click the Save button when no fields have been filled out. I make sure that all of the required fields have error messages.

2. I fill out the two non-required fields, click the Save button, and verify that all the required fields have error messages.

3. I fill out one required field and click the Save button, and I verify that all the other required fields have error messages. I start with submitting just the First Name, then just the Last Name, then just the Personal Phone, etc., until I have cycled through all of the required fields.

4. I try various combinations of two, three, four, and five required fields to make sure the appropriate error messages are displayed. I don’t concern myself with testing every possible combination, since it’s highly likely that the required fields will behave similarly in these situations.

5. I try filling out all the required fields but one, and verify that I still receive an error for that field. I cycle through all the possibilities of having one missing required field.

6. I fill out all of the fields (required and non-required) except for one required field, click the Save button, and verify that I still receive an error.

7. I fill out all of the required fields and click the Save button, and I verify that no error messages appear and that the entered fields have been saved correctly to the database.

8. Finally, I fill out all of the fields (required and non-required) and click the Save button, and I verify that no error messages appear and that the entered fields have been saved correctly to the database.
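If it helps to keep track of the cases, the cycling in steps 1, 3, 5, and 7 can be written down as data before any clicking starts. This is only a rough sketch: the field names come from the example form, while `expected_errors` and `required_field_cases` are hypothetical helper names I made up for illustration.

```python
# Required fields as marked with a red asterisk on the example form.
REQUIRED = ["First Name", "Last Name", "Personal Phone",
            "Street Address 1", "City", "State", "Zip Code"]


def expected_errors(filled):
    """Required fields that should show an error, given the fields filled in."""
    return [field for field in REQUIRED if field not in filled]


def required_field_cases():
    """Yield (filled_fields, fields_expected_to_show_errors) pairs."""
    yield set(), expected_errors(set())            # step 1: nothing filled out
    for field in REQUIRED:                         # step 3: exactly one filled out
        yield {field}, expected_errors({field})
    for field in REQUIRED:                         # step 5: all but one filled out
        filled = set(REQUIRED) - {field}
        yield filled, expected_errors(filled)
    yield set(REQUIRED), []                        # step 7: everything filled out


cases = list(required_field_cases())
```

With seven required fields this produces sixteen cases, each one a checklist line: what to fill in, and which error messages should appear after clicking Save.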

Step Two: Field Validation

The next thing I do is verify that each individual text box has appropriate validation on it. First, for each text field, I discover what the upper and lower character limits are. I enter in all the required fields except for the one text box I am testing. Then in that text box:

1. I try entering just one character (assuming the lower limit is greater than one)

2. I try entering the lower limit of characters, minus one character

3. I try entering the upper limit of characters, plus one character

4. I try entering a number of characters far beyond the upper limit

In all of these instances, the form should not save, and I should receive an appropriate error message.

Next:

5. I enter the lower limit of characters, and verify that the form is saved correctly

6. I enter the upper limit of characters, and verify that the form is saved correctly
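The six length checks above can be generated from the two limits, which saves counting characters by hand. The sketch below assumes a field that accepts between `lower` and `upper` characters; `boundary_cases` and its `far_beyond` multiplier are hypothetical names, not part of any real tool.

```python
def boundary_cases(lower, upper, far_beyond=10):
    """Return (text, should_save) pairs for a field accepting lower..upper characters.

    far_beyond is an assumed multiplier for the "far beyond the upper limit" case.
    """
    lengths = {
        1: lower <= 1 <= upper,        # just one character
        lower - 1: False,              # lower limit minus one
        upper + 1: False,              # upper limit plus one
        upper * far_beyond: False,     # far beyond the upper limit
        lower: True,                   # exactly the lower limit
        upper: True,                   # exactly the upper limit
    }
    # Duplicate lengths collapse into one entry; negative lengths are dropped.
    return [("a" * n, ok) for n, ok in sorted(lengths.items()) if n >= 0]


name_cases = boundary_cases(2, 5)
```

For a field with limits of 2 and 5, this gives strings of length 1, 2, 5, 6, and 50, with only the 2- and 5-character strings expected to save.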

Now that I have confirmed that the limits on characters are respected, it’s time to try various letters, numbers, and symbols. For each text field, I find out what kinds of letters, numbers, or symbols are allowed. For example, the First and Last Name fields should allow apostrophes (e.g., O’Connor) and hyphens (e.g., Smith-Clark), but should probably allow no numbers or other symbols. For each text field:

1. I try entering all of the allowed letters, numbers and symbols

2. I try entering the letters, numbers, or symbols that are not allowed, one at a time, until I have verified that they are all not allowed

For fields that have very specific accepted formats, I test those formats now. For instance, I should be able to enter a Zip Code of 03773-2817, but not one of 03773-28. For the State field, the two-letter code I put into the field should be a valid state, so I should be able to enter MA, but not XX.
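To make the expected behavior concrete, here is a rough sketch of the rules described above written as Python checks. These are my assumptions about what the form should accept, not the form’s actual validation: the name pattern only covers unaccented letters, apostrophes, and hyphens, and `VALID_STATES` is deliberately cut down to a handful of codes.

```python
import re

# Assumed rule: names start with a letter, then letters, apostrophes, or hyphens.
NAME_RE = re.compile(r"[A-Za-z][A-Za-z'’-]*")
# Five digits, optionally followed by a hyphen and four more digits.
ZIP_RE = re.compile(r"\d{5}(-\d{4})?")
# Truncated for brevity; a real check would list every state code.
VALID_STATES = {"MA", "NH", "NY", "CA"}


def is_valid_name(text):
    return NAME_RE.fullmatch(text) is not None


def is_valid_zip(text):
    return ZIP_RE.fullmatch(text) is not None


def is_valid_state(text):
    return text in VALID_STATES
```

Having the rules written out like this makes it easy to list the inputs worth trying in the form: `O'Connor` and `Smith-Clark` should pass, `Sm1th` should not; `03773-2817` should pass, `03773-28` should not; `MA` should pass, `XX` should not.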

Even though I’ve already tested all the forbidden numbers and symbols, I try a few cross-site scripting and SQL injection examples, to make sure no malicious code gets through. (More on this in a future post.)
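As a starting point, a short list of probe strings is enough for a first pass. The strings below are common textbook examples, the `users` table name is made up, and `render_safely` is a hypothetical stand-in for however the application escapes stored values before displaying them; it uses Python’s `html.escape` purely for illustration.

```python
import html

# A few classic probes; real testing would use a longer, curated list.
PROBES = [
    "<script>alert(1)</script>",
    "<img src=x onerror=alert(1)>",
    "' OR '1'='1",
    "'; DROP TABLE users; --",
]


def render_safely(value):
    """Stand-in for the page's output escaping (an assumption, not the real app)."""
    return html.escape(value, quote=True)


rendered = [render_safely(probe) for probe in PROBES]
```

If any of these strings is accepted by a field, the thing to look for is that it comes back as inert text (as in `rendered`) rather than as live markup or a changed query.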

Step Three: Buttons

Even though I have already used the Save button dozens of times at this point, there are still a few things left to test here. And I have not yet used the Cancel button. So:

1. I click the Save button several times at once, and verify that only one instance of the data is saved

2. I click the Save button and then the Cancel button very quickly, and verify that the data is saved and that there are no errors

3. I click the Cancel button when no data has been entered, and verify that there are no errors

4. I click the Cancel button when data has been entered, and verify that the data is cleared. I’ll try this several times, with various combinations of required and non-required fields.

5. I click the Cancel button several times quickly, and verify that there are no errors

Step Four: Data

Finally, it’s time to look at the data that is saved. In earlier steps, I’ve already done some quick checks to determine if the data that I am entering is saved to the database. Now I’ll check:

1. That bad data I’ve entered is not saved to the database

2. That good data I’ve entered is saved to the database correctly

3. That good data I’ve entered is retrieved from the database correctly, and displayed correctly on the form
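The three checks above can be sketched against a throwaway database. Everything here is assumed for illustration: an in-memory SQLite database stands in for the real one, and the `contacts` table, `save_contact`, and `load_contacts` are hypothetical names with only three of the form’s fields.

```python
import re
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (first_name TEXT, last_name TEXT, zip_code TEXT)")


def save_contact(conn, first, last, zip_code):
    """Hypothetical save path: reject a malformed Zip Code, otherwise insert."""
    if re.fullmatch(r"\d{5}(-\d{4})?", zip_code) is None:
        return False
    # Bound parameters keep the apostrophe in O'Connor from breaking the query.
    conn.execute("INSERT INTO contacts VALUES (?, ?, ?)", (first, last, zip_code))
    conn.commit()
    return True


def load_contacts(conn):
    return conn.execute(
        "SELECT first_name, last_name, zip_code FROM contacts"
    ).fetchall()


saved_bad = save_contact(conn, "Pat", "O'Connor", "03773-28")     # bad data
saved_good = save_contact(conn, "Pat", "O'Connor", "03773-2817")  # good data
rows = load_contacts(conn)
```

After both attempts, the table should hold exactly one row, and that row should match what was typed, apostrophe and leading zero included.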

If the form I am testing has Edit and Delete functionality, it’s important to test these as well, but I’ve covered these in my post CRUD Testing Part II- Update and Delete.

Once I’ve gone through a form in this systematic way, it’s almost certain I will have found a few bugs to keep the developers busy. It’s tedious, yes, but once the bugs have been fixed I can move on to automation testing with confidence, knowing that I have really put this form through its paces!