It’s very easy when you are rushing to complete features to let some bugs slide. This article will show why in most cases it’s better to fix all the bugs now rather than later. The following scenario is hypothetical, but is based on my experience as a tester.

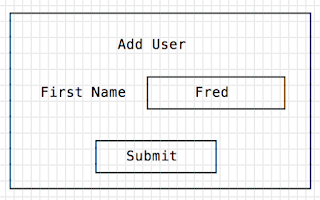

NewTech Inc. is very excited about offering a new email editor to their customers. Customers will be able to compose emails to their clients and schedule when they should be sent from within the NewTech app. NewTech’s service reps will also have the ability to add or change a company logo for the customers.

Because the feature is on a deadline, developers are rushing to complete the work. The QA engineer finds an issue: when changing the company logo, the logo doesn’t appear to have changed unless the user logs out and back in again. The team discusses this issue and decides that because customers won’t see the issue (since it’s functionality that only NewTech employees can use), it’s safe to let this issue go on the backlog to be fixed at another time.

The feature is released, and customers begin using it. The customers would all like to add their company logo to their emails, so they begin calling the NewTech service reps asking for this service. The service reps add the logo and save, but they don’t see the logo appear on the email config page. The dev team has forgotten to let them know that there’s a bug here, and that the workaround is to log out and back in again.

Let’s assume that each time a service rep encounters the issue and emails someone on the dev team about it, five minutes is wasted. If there are ten service reps on the team and each one runs into the issue once, that’s fifty wasted minutes.

Total time wasted to date: Fifty minutes

But now everyone knows about the issue, so it won’t be a problem anymore, right? Wrong! NewTech has hired two new QA engineers, and neither of them knows about the issue. They encounter it in their testing and ask the original QA engineer about it. “Oh, that’s a known issue,” he replies. “It’s on the backlog.” Time wasted: ten minutes for each new QA engineer to investigate the problem and check with the first QA engineer, or twenty minutes in all.

Total time wasted to date: One hour and ten minutes

Next, a couple of new service reps are hired. At some point, they each get a request from a company to change the company’s logo. When they go to make the change, the logo doesn’t update. They don’t know what’s going on, so they ask their fellow service reps. “Oh yeah, that’s a bug,” say the senior service reps. “You just need to log out and back in again.” Time wasted: ten minutes for each new service rep to puzzle over the problem and talk it through with the senior service reps, or twenty minutes in all.

Total time wasted to date: One hour and thirty minutes

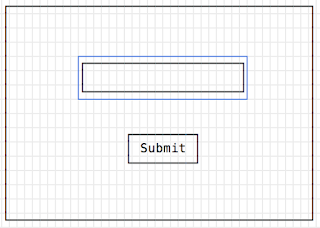

It’s time to add new features to the application. NewTech decides to give their end users the option of adding a profile picture to their account. A new dev is tasked with adding this functionality. He sees that there’s an existing method to add an image to the application, so he chooses to call that method to add the profile picture. He doesn’t know about the login/logout bug. When one of the QA engineers tests the new feature, she finds that profile picture images don’t refresh unless she logs out and back in again. She logs a new bug for the issue. Investigating the problem and reporting it takes twenty minutes.

Total time wasted to date: One hour and fifty minutes

The dev team meets and decides that because customers will see the issue, it’s worth fixing. The dev assigned to fix the issue is not the one who wrote the image-adding method (he has since moved on to another company), so it takes her a while to familiarize herself with the code. Time spent fixing the issue: two hours.

Total time wasted to date: Three hours and fifty minutes

The dev who fixed the issue didn’t realize that the bug also affected the company logo, so she didn’t mention it to the QA engineer assigned to test her bug fix. The QA engineer tests the bug fix and finds that it works correctly, so she closes the issue. Time spent testing the fix: thirty minutes.

Total time wasted to date: Four hours and twenty minutes

When it’s time for the new feature to be released, the QA team does regression testing. They discover that there is now a new issue on the email page: because of the fix for the profile images, now the email page refreshes when customers make edits, and the company logo disappears from the page. One of the QA engineers logs a separate bug for this issue. Time spent investigating the problem and logging the issue: thirty minutes.

Total time wasted to date: Four hours and fifty minutes

The dev team realizes that this issue will have a significant impact on customers, so the developer quickly starts working on a fix. This time she realizes that the code she is working on affects both the profile page and the email page, so she checks her fix on both pages and advises the QA team to be sure to test both pages as well. Time spent fixing the problem: one hour. Time spent testing the fixes: one hour.

Total time wasted to date: Six hours and fifty minutes

How much time would it have taken for the original developer to fix the original issue? Let’s say thirty minutes, because he was already working with the code. How much time would it have taken to test the fix? Probably thirty minutes, because the QA engineer was already testing that page, and the code was not used elsewhere.
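The scenario’s arithmetic can be checked with a short Python tally. The step labels below are shorthand invented for this sketch, and the minute values are simply the ones implied by the running totals in the story; they are illustrative, not measured data.

```python
from itertools import accumulate

# Minutes lost at each step of the hypothetical NewTech scenario.
# Labels are illustrative shorthand; values follow the story's running totals.
deferred_costs = [
    ("Service reps hit the logo bug and email the dev team", 10 * 5),
    ("New QA engineers investigate and ask about it", 20),
    ("New service reps get confused and ask around", 20),
    ("QA finds the bug again in the profile-picture feature", 20),
    ("A different dev fixes the shared image method", 120),
    ("QA tests the profile-picture fix", 30),
    ("Regression testing finds the disappearing email logo", 30),
    ("Fixing the regression", 60),
    ("Testing the regression fix on both pages", 60),
]

# Estimated cost of fixing the bug when it was first found:
# the original dev's fix plus the QA engineer's retest.
immediate_fix = 30 + 30


def fmt(minutes):
    """Format a number of minutes as 'H h M min'."""
    hours, mins = divmod(minutes, 60)
    return f"{hours} h {mins} min"


# Running total after each step, mirroring the "Total time wasted to date" lines.
running = list(accumulate(m for _, m in deferred_costs))
for (step, _), total in zip(deferred_costs, running):
    print(f"{step}: total {fmt(total)}")

deferred_total = running[-1]
saved = deferred_total - immediate_fix

print("Deferred:", fmt(deferred_total))
print("Immediate:", fmt(immediate_fix))
print("Saved:", fmt(saved))
```

Changing any single number in the list (say, a longer ramp-up for the dev who inherits unfamiliar code) shows how quickly the gap between fixing now and fixing later can grow.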

So, by fixing the original issue when it was found, NewTech would have saved nearly six hours of work (six hours and fifty minutes of follow-on effort, minus the hour the original fix would have taken) that could have been spent on other things. This may not seem like a lot, but across all the features in an application, it really adds up. And this scenario doesn’t account for lost productivity from interruptions. If a developer is fielding questions from the service reps all day about known issues that were never fixed because they weren’t customer-facing, it’s hard for him to stay focused on his coding.

The moral of the story is: unless you think that no user, internal or external, will ever encounter the issue, fix things when you find them!