In last week’s post, we took a look at a hypothetical software feature which would sort out Superballs among four children according to a set of rules. I came up with forty-five different test cases from simple to complicated that would test various combinations of the rules.

But a blog post is not a very easy way to read or execute on a test plan! So in this week’s post, I’ll discuss the techniques I use to organize a test plan, and also discuss ways that I find less effective.

What I Don’t Do

1. I don’t write up step by step instructions, such as

Navigate to the login page

Enter the username into the username field

etc. etc.

Unless you are writing a test plan for someone you have never met, who you will never talk to, and who has never seen the application, this is completely unnecessary. While some level of instruction is important when your test plan is meant for other people, it’s safe to assume that you can provide some documentation about the feature elsewhere.

2. I don’t add screenshots to the instructions.

While screenshots are helpful in documentation, in a test plan they just make the plan larger and harder to read.

3. I don’t use a complicated test tracking system.

In my experience, test tracking systems require more time to maintain than the time needed to actually run the tests. If there are regression tests that need to be run periodically, they should be automated. Anything that can’t be automated can be put in a one-page test plan for anyone to use when the need arises.

What I Do

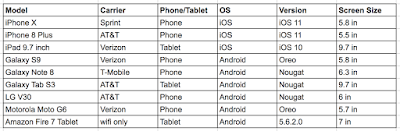

1. I use a spreadsheet to organize my tests.

Spreadsheets are so wonderful, because the table cells are already built in. They are easy to use, easy to edit, and easy to share. For test plans that I will be running myself, I use an Excel spreadsheet. For plans that I will be sharing with my team, I use a table in a Confluence page that we can all edit.

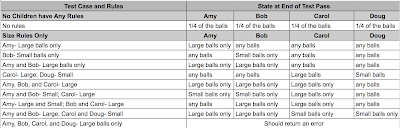

You can see the test spreadsheet I created for our hypothetical Superball sorter here. I’ll also be including some screenshots in this post.

2. I keep the instructions simple.

In the screenshot below, you can see the first two sections of my test:

In the second test case, I’ve called the test “Amy- Large balls only”. This is enough for me to know that what I’m doing here is setting a rule for Amy that she should accept Large balls only. I don’t need to write “Create a rule for Amy that says that she should accept Large balls only, and then run the ball distribution to pass out the balls”. All of that is assumed from the description of the feature that I included in last week’s post.

Similarly, I’ve created a grouping of four columns called “State at End of Test Pass”. There is a column for each child, and in each cell I’ve included what the expected result should be for that particular test case. For example, in the third test case, I’ve set Amy, Carol, and Doug to “any balls”, and Bob to “Small balls only”. This means that Amy, Carol, and Doug can have any kind of balls at all at the end of the test pass, and Bob should have only Small balls. I don’t need to write “Examine all of Bob’s balls and verify that they are all Small”. “Small balls only” is enough to convey this.
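For readers who like to see the layout as data, here is a minimal sketch of the same idea in Python: one row per test case, with a short name and the expected end state for each child. The names `CHILDREN`, `TEST_CASES`, and `to_csv` are hypothetical, as is the extra "Result" column; the name of the second row and the expected values in the first row are assumptions, since the post only spells out the expected state for the third test case.

```python
import csv
import io

# The four children, in the column order used under "State at End of Test Pass".
CHILDREN = ["Amy", "Bob", "Carol", "Doug"]

# One dict per test case: a short name plus the expected end state per child.
# Assumption: the first row's expected values and the second row's name are
# illustrative guesses, not copied from the real spreadsheet.
TEST_CASES = [
    {"Test": "Amy- Large balls only", "Amy": "Large balls only",
     "Bob": "any balls", "Carol": "any balls", "Doug": "any balls"},
    {"Test": "Bob- Small balls only", "Amy": "any balls",
     "Bob": "Small balls only", "Carol": "any balls", "Doug": "any balls"},
]


def to_csv(test_cases):
    """Render the test cases as CSV text with an empty Result column."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["Test"] + CHILDREN + ["Result"],
        lineterminator="\n",
    )
    writer.writeheader()
    for case in test_cases:
        # Result starts blank and is filled in while running the test.
        writer.writerow({**case, "Result": ""})
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(TEST_CASES))
```

The resulting CSV text can be opened in any spreadsheet program, which keeps the plan as short as the hand-built version.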

3. I use headers to make the test readable.

This test plan has forty-five test cases, so I need to scroll through it to see all the tests. For that reason, I make sure that every section has good headers so I don’t have to remember what the header values are at the top of the page.

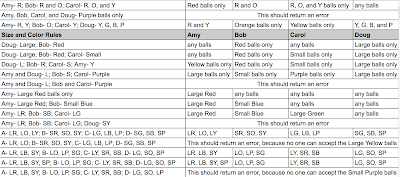

In the above example, you can see the end of one test section and the beginning of the final test section, “Size and Color Rules”. I’ve put in the headers for Amy, Bob, Carol, and Doug, so that I don’t have to scroll back up to the top to see which column is which.

4. I keep the chart cells small to keep the test readable.

As you can see below, as the test cases become more complex, I’ve added abbreviations so that the test cases don’t take up too much space:

After running several tests, it’s pretty easy to remember that “A” equals “Amy” and “LR” equals “Large Red”.
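If the abbreviations ever need to be spelled out again, say for a report, a small lookup table is enough. The sketch below is only an illustration: "A" for Amy and "LR" for Large Red come from the plan, while the other initials, the `"A: LR"` cell format, and the function names are assumptions made for the example.

```python
# Abbreviation tables. Only "A" -> "Amy" and "LR" -> "Large Red" are taken
# from the post; the remaining entries are assumed by analogy.
CHILD_NAMES = {"A": "Amy", "B": "Bob", "C": "Carol", "D": "Doug"}
SIZES = {"L": "Large", "S": "Small"}
COLORS = {"R": "Red"}  # add the other colors used in the plan here


def expand_ball(code):
    """Expand a two-letter size+color code such as "LR" into words."""
    size, color = code[0], code[1]
    return f"{SIZES[size]} {COLORS[color]}"


def expand_cell(cell):
    """Expand a hypothetical cell such as "A: LR" into "Amy: Large Red"."""
    child, ball = [part.strip() for part in cell.split(":")]
    return f"{CHILD_NAMES[child]}: {expand_ball(ball)}"


if __name__ == "__main__":
    print(expand_cell("A: LR"))
```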

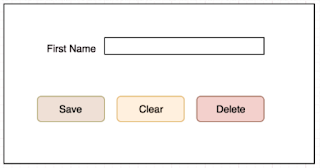

5. I use colors to indicate a passing or failing test.

The great thing about a simple test plan is that it’s easy to use it as a report for others. I’ll be talking about bug and test reports in a future post, but for now it’s enough to know that a completed test plan like this will be easy for anyone to read. Here’s an example of what the third section of the test might look like when it’s completed, if all of the tests pass:

If a test fails, it’s marked in red. If there are any extra details that need to be added, I don’t put them in the cell, since that would make the chart hard to read; instead, I add notes on the side that others can read if they want more detail.

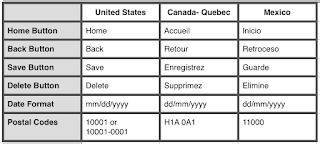

6. I use tabs for different environments or scenarios.

The great thing about spreadsheets such as Google Sheets or Excel is that they offer the use of tabs. If you are testing a feature in your QA, Staging, and Prod environments, you can have a tab for each environment, and copy and paste the test plan in each. Or you can use the tabs for different scenarios. In the case of our Superball Sorter test plan, we might want to have a tab for testing with a test run of 20 Superballs, one for 100 Superballs, and one for 500 Superballs.
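The copy-and-paste step can be pictured as making one independent copy of the plan per tab. This is a rough sketch in plain Python rather than a real spreadsheet API; `make_tabs` and the row fields "Result" and "Notes" are hypothetical names chosen for the example.

```python
import copy


def make_tabs(plan, tab_names):
    """Return one independent copy of the plan for each tab name."""
    # deepcopy ensures that marking a result in one tab leaves the others blank.
    return {name: copy.deepcopy(plan) for name in tab_names}


if __name__ == "__main__":
    # A two-row stand-in for the full plan (hypothetical rows).
    plan = [
        {"Test": "Amy- Large balls only", "Result": "", "Notes": ""},
        {"Test": "Bob- Small balls only", "Result": "", "Notes": ""},
    ]
    tabs = make_tabs(plan, ["QA", "Staging", "Prod"])
    tabs["QA"][0]["Result"] = "Pass"
    for name, rows in tabs.items():
        print(name, [row["Result"] for row in rows])
```

The same function would work for scenario tabs, for example one tab each for runs of 20, 100, and 500 Superballs.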

Test plans should be easy to read, easy to follow, and easy to use when documenting results. You don’t need fancy test tools to create them; just a simple spreadsheet, an organized mindset, and an ability to simplify instructions are all you need.

Looking over the forty-five test cases in this plan, you may be saying to yourself, “This would be fine to run once, but I wouldn’t want to have to run it with every release.” That’s where automation comes in! In next week’s post, I’ll talk about how I would automate this test plan so regression testing will take care of itself.